When a Limp Isn’t Just a Sprain in Adolescents

A timely X-ray can save young hips

Slipped Capital Femoral Epiphysis (SCFE) is the most common adolescent hip disorder. It occurs when the ball at the top of the thigh bone (femoral head) slips off the neck of the bone through the growth plate (physis). A bit like an ice cream sliding off a cone… Dr Ryno du Plessis, a renowned orthopaedic and joint replacement surgeon in the Western Cape, talks about what it is and why it is often misdiagnosed.

SCFE usually happens during growth spurts in children aged 9 to 16 years and is more common in boys and in children with obesity, endocrine disorders, or other risk factors.

Why is this problem often missed?

Despite its frequency, SCFE is routinely misdiagnosed or diagnosed late – unfortunately, sometimes months after symptoms start. Studies show that over 50% of SCFE cases are not diagnosed at the first medical visit.

Here’s why:

- Pain felt in the knee or thigh: Physicians often focus on the wrong joint and the hip is never X-rayed

- Labelled as a groin strain: Adolescents in sports may be diagnosed with muscle strains or ‘growing pains’

- Symptoms develop gradually: Children may limp without severe pain, leading to delayed concern

- Physiotherapy prescribed early: Patients are referred to physio instead of for imaging, which delays diagnosis

- Lack of hip-specific X-rays: Detecting SCFE requires a frog-leg lateral X-ray, which is often not taken

Why does delay matter?

The longer the slip is left untreated, the more serious the outcome. Every week or month of delay increases the severity of the deformity, often silently.

Late diagnosis risks:

- More severe deformity

- Loss of blood supply to the femoral head. This is known as avascular necrosis and can lead to pain, limited movement and, eventually, hip collapse and osteoarthritis

- Early-onset hip arthritis

- Complex surgery

Children diagnosed early often need just one screw to stabilise the hip. Those who are diagnosed late may face major reconstructive surgery, longer recovery, and reduced hip function for life.

Red flags for parents, teachers and coaches

If you notice any of the following signs in a child or teen – especially those who are overweight – take it seriously and ask for a hip X-ray:

- Limping for more than a week

- Complaints of pain in the knee, thigh, groin, or buttock

- Walking with the foot turned outwards

- Stiffness or loss of motion in the hip

- Sudden inability to walk or stand after a minor stumble (may indicate an unstable SCFE)

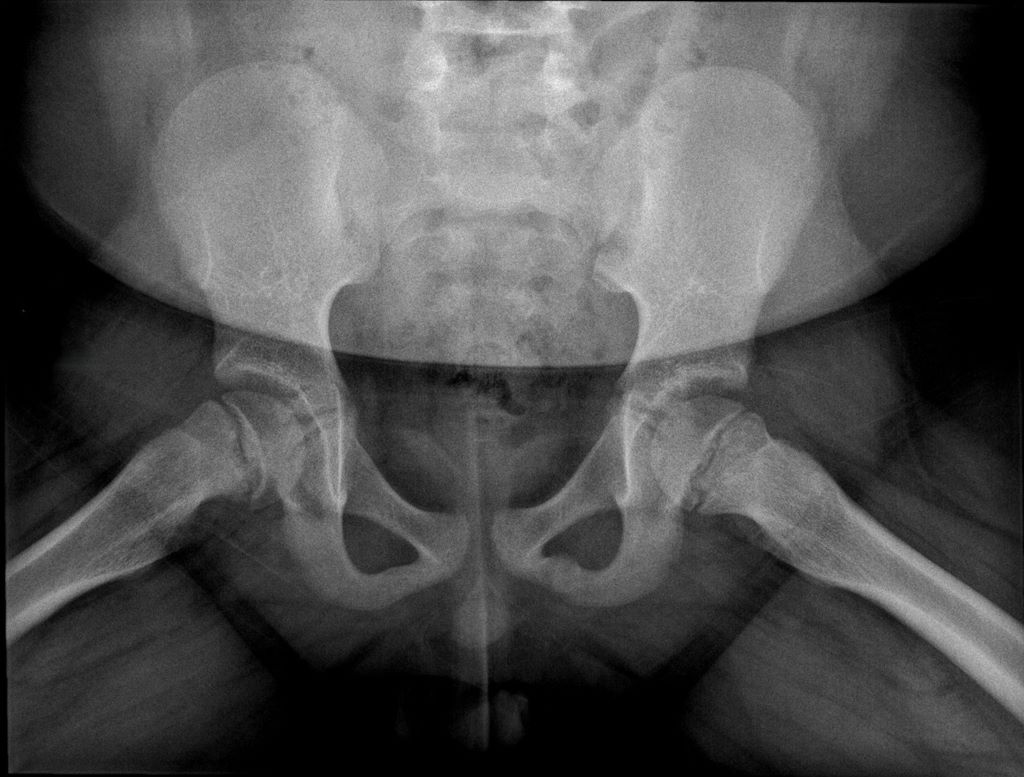

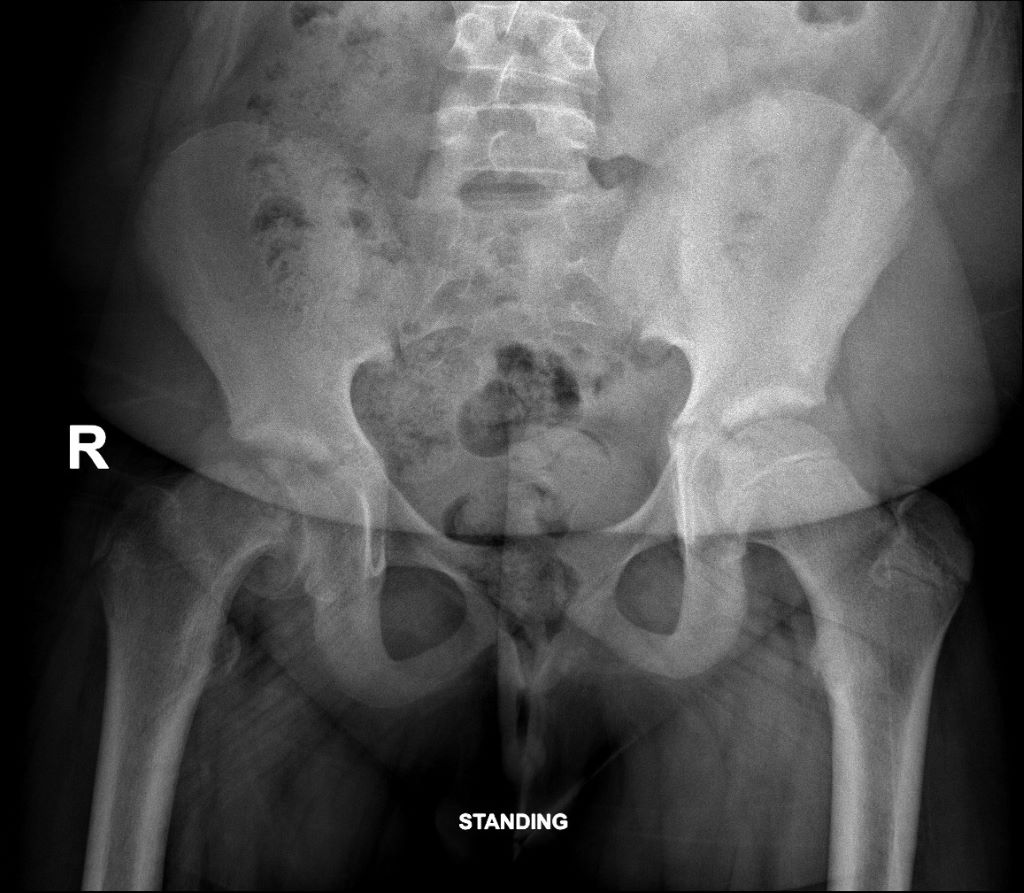

Radiology – diagnostic challenges

Dr Jaco Greyling, a radiologist from SCP Radiology, says SCFE diagnoses can be delayed due to several factors, including:

- Hip X-rays not ordered by the initial healthcare provider (eg, GP or physiotherapist)

- Only a single anterior-posterior pelvis projection is performed. A frog-leg lateral view must also be specifically requested by the referring physician, and radiologists should ensure the child returns for this view if it was not initially ordered

- Findings in the pre-slip phase are subtle and may be missed, even by experienced radiologists

He says, ‘The recommended imaging is an anterior-posterior pelvic view, which shows malalignment and widening of the growth plate, and a frog-leg lateral view, which is the most sensitive for detecting early or subtle slips.’

The key radiological signs, says Dr Greyling, are:

- Widening of the growth plate

- Loss of height of the femoral head

- Loss of alignment of the anatomical lines that intersect with the femoral head

- ‘Melting ice cream sign’: the femoral head (epiphysis) appears to slip off the femoral neck at the growth plate (physis)

Follow-up recommendations:

Dr Greyling suggests repeat imaging within two weeks if symptoms persist. For patients with risk factors and ongoing pain, he recommends early referral to a paediatric orthopaedic surgeon and an MRI.

Who’s at risk?

- Children aged 9-16 years

- Boys are at greater risk than girls

- BMI in the overweight/obese range

- Family history of hip disorders

- Endocrine and related conditions: hypothyroidism, growth hormone treatment, kidney disease

Treatment

Early SCFE is usually treated with in-situ fixation using one or two screws. The goal is to stabilise the femoral head (the rounded end of the thigh bone) to prevent further slippage.

In cases where both hips are at risk (especially in young or overweight patients), pinning the opposite hip is sometimes recommended to prevent a slip from occurring there too.

Severe or late cases have a high risk of avascular necrosis (AVN), the death of bone tissue caused by a disruption in its blood supply. This leads to pain and stiffness and, over time, to potential bone collapse, joint destruction and permanent disability.

The take-home message

SCFE is treatable, and its most serious complications are preventable, if it is recognised early.

If a child has an unexplained limp, especially with thigh or knee pain, don’t assume it’s just a strain. Ask the doctor directly: “Could this be SCFE? Should we get hip X-rays done?”