South Africa, PATH, and Wellcome Launch World’s First AI Framework for Mental Health at G20 Social Summit

As artificial intelligence (AI) increasingly enters the mental health space, from therapy chatbots to diagnostic tools, the world faces a critical question: can AI expand access to care without putting people at risk?

At the G20 Social Summit in Johannesburg, South Africa announced a landmark national effort to answer that question. The South African Health Products Regulatory Authority (SAHPRA) and PATH, with funding from Wellcome, have launched the Comprehensive AI Regulation and Evaluation for Mental Health (CARE MH) program to develop the world’s first regulatory framework for artificial intelligence in mental health.

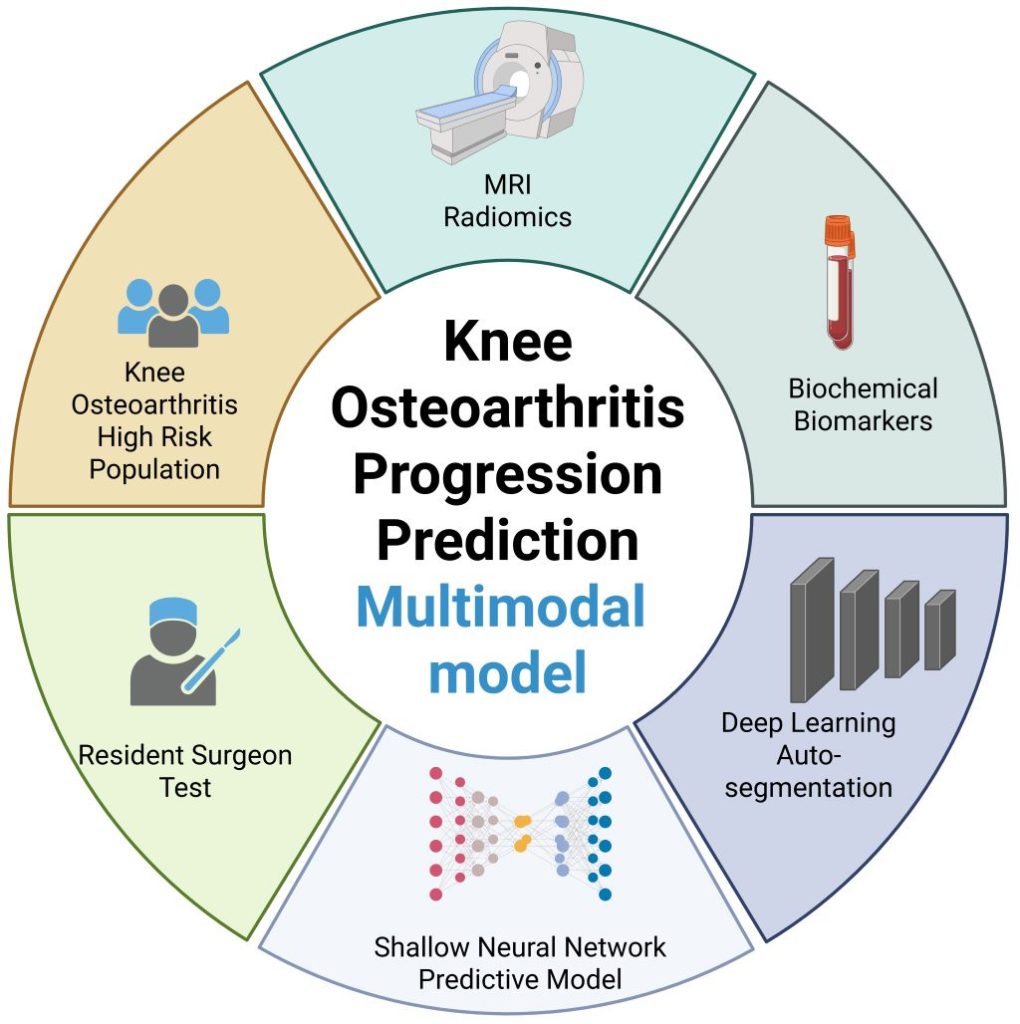

CARE MH will establish a science-based and ethically robust regulatory framework that describes how AI tools need to be evaluated for safety, inclusivity, and effectiveness before they can be given market authorization and made available to potential service users. It aims to strengthen trust in digital health innovation and will serve as a model for other countries seeking to strike a balance between innovation and oversight.

“You wouldn’t give your child or loved one a vaccine or drug that hadn’t been tested or evaluated for safety,” said Bilal Mateen, Chief AI Officer at PATH. “We’re working to bring that same standard of rigorous evaluation to AI tools in mental health, because trust must be earned, not assumed.”

The framework will be developed and tested in South Africa, with the intention of extending its application across the African continent and to international partners.

“SAHPRA is proud to lead the development of Africa’s first regulatory framework for AI in mental health linked directly to market authorization,” said Christelna Reynecke, Chief Operations Officer of SAHPRA. “Our true goal is even more ambitious, though; we want to create a regulatory environment for AI4health in general, one that keeps pace with innovation, grounded in scientific rigor, ethical oversight, and public accountability.”

“Millions of people across the globe are being held back by mental health problems, which are projected to become the world’s biggest health burden by 2030,” said Professor Miranda Wolpert MBE, Director of Mental Health at Wellcome. “CARE MH is a vital step toward ensuring that AI technologies in this space are safe, effective, and equitable.”

The goal is simple: help more people, safely.

Through CARE MH, the partners behind this initiative are laying the foundation for the next generation of ethical, evidence-based AI in mental health. The program is supported by global experts from Audere Africa, African Health Research Institute, the UK’s Centre for Excellence in Regulatory Science and Innovation for AI & Digital Health, the UK Medicines and Healthcare products Regulatory Agency, University of Birmingham, University of Washington, and the Wits Health Consortium, and it is built to protect and empower people everywhere.